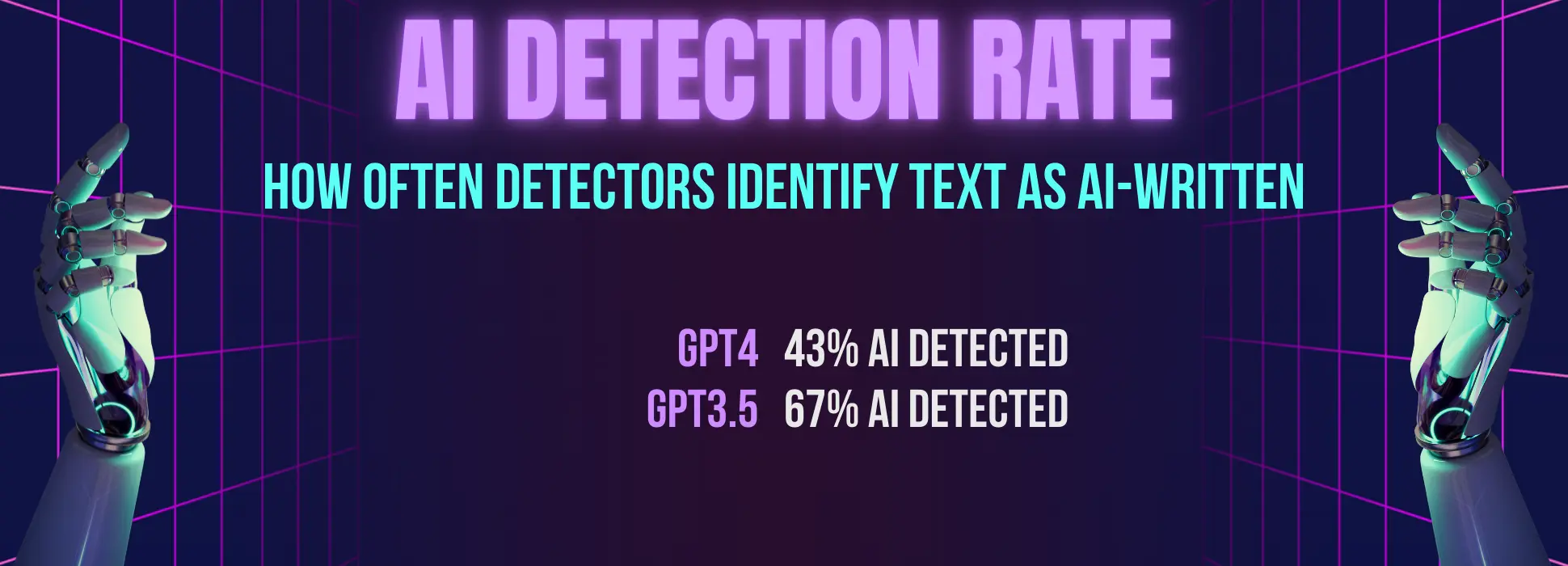

In our research, we tried to answer these questions. We conducted a comprehensive comparison by giving both models the same prompts and checking their results through various analyzers. Not only were we interested in how easy it was to tell that ChatGPT 3.5 and 4.0 content was made by AI, but we were also interested in how well famous AI-content detectors generally performed in finding this kind of content.

Let's look more closely at the individual results to get a better sense of how these two models work.

How well do content analyzers detect AI text?

To begin with, we gave several prompts to both ChatGPT versions and checked how the analyzers rated the output of each. Looking ahead, we were amazed at how poorly the analyzers did their job and how they sometimes mistook AI text for human writing.

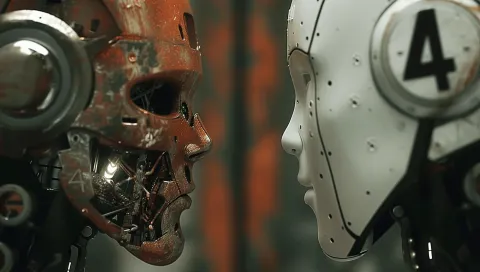

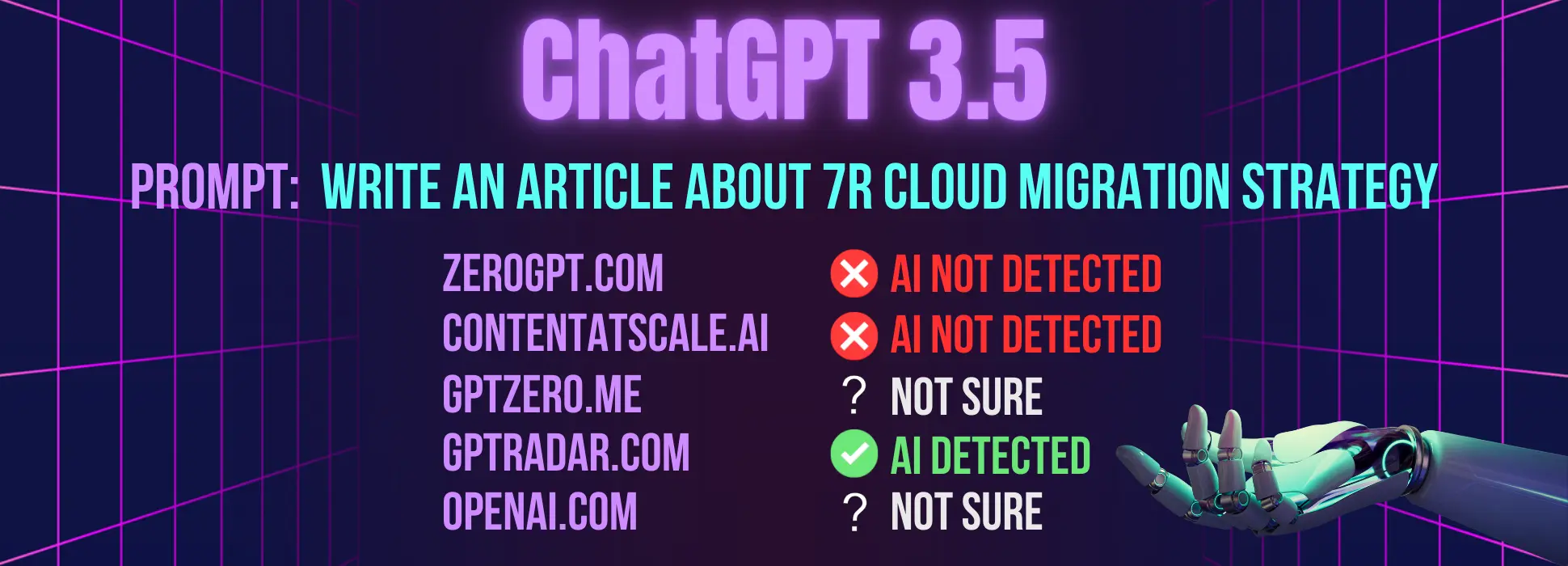

Prompt #1 "Write me an article about the 7Rs cloud migration strategy."

For the first prompt, ChatGPT 3.5 generated 537 words. Interestingly, only one of the five AI detectors, GPTRadar, correctly identified the text as AI-generated. The other four either said with certainty that a person was behind the content or said they weren't sure where the text came from.

When we switched to ChatGPT 4.0, the response grew to 625 words. Even though the developers describe this version as better than the last one, the detection results were the same as with ChatGPT 3.5: again, only one AI detector correctly said that the text was made by AI.

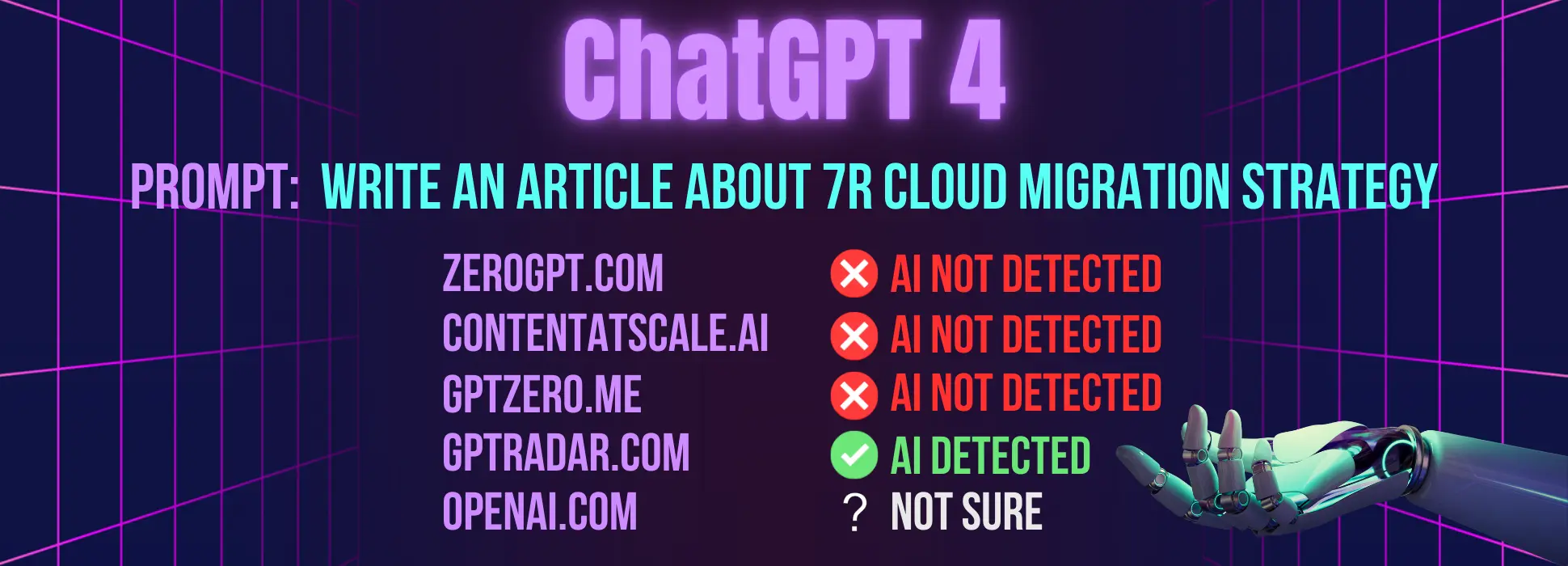

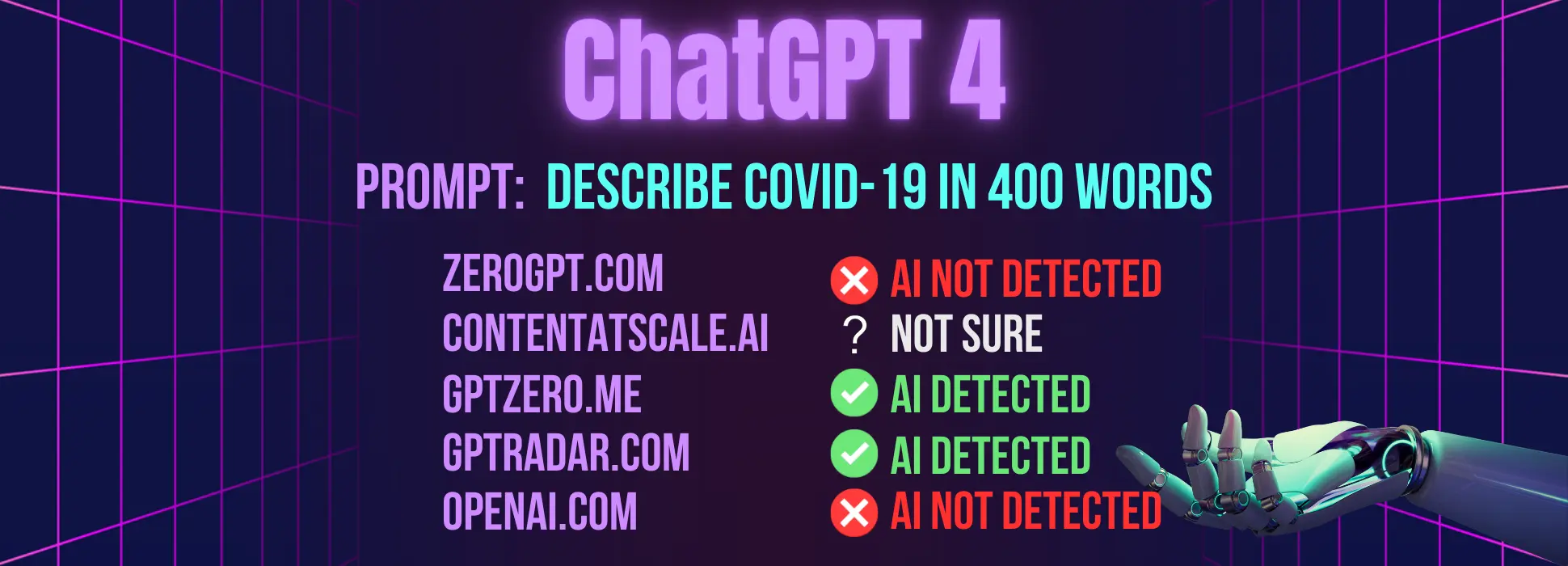

Prompt #2 "Describe COVID-19 in 400 words"

Moving on to the second task, we deliberately asked ChatGPT for a specific length: 400 words about COVID-19. The results revealed differences between ChatGPT 3.5 and 4.0 in both content generation and detectability.

ChatGPT 3.5 overshot the requested length, producing a text of 464 words.

In this case, though, there was a noticeable change in how well the AI detectors worked. Almost all of them correctly identified the text as AI-generated, which is a big improvement over the earlier results.

Interestingly, the only detector to express uncertainty was the one from OpenAI itself, which returned an "unlikely AI-generated" response.

In contrast, ChatGPT 4.0 stuck very close to the requested word count, producing 399 words. This demonstrated an enhanced capability for precision in response to specific prompts.

ChatGPT 4.0 also did a better job of making the text look like it was written by a real person. Two of the five AI detectors mistakenly categorized it as human-authored, and one was undecided.
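The word-count comparison for this prompt can be reproduced with a few lines of Python. This is a minimal sketch, not the tooling used in the study: the helper names `word_count` and `deviation_from_target` are hypothetical, and counting whitespace-separated tokens is an assumption about what counts as a "word".

```python
def word_count(text: str) -> int:
    """Count whitespace-separated tokens (an assumed definition of a word)."""
    return len(text.split())


def deviation_from_target(actual: int, target: int) -> float:
    """Return the signed deviation from the target length, in percent."""
    return (actual - target) / target * 100


# Word counts reported in the text for Prompt #2 (requested length: 400 words).
reported = {"ChatGPT 3.5": 464, "ChatGPT 4.0": 399}

for model, words in reported.items():
    deviation = deviation_from_target(words, 400)
    print(f"{model}: {words} words ({deviation:+.2f}% vs. target)")
```

With the reported figures, ChatGPT 3.5 lands 16% above the requested length, while ChatGPT 4.0 is a quarter of a percent below it.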

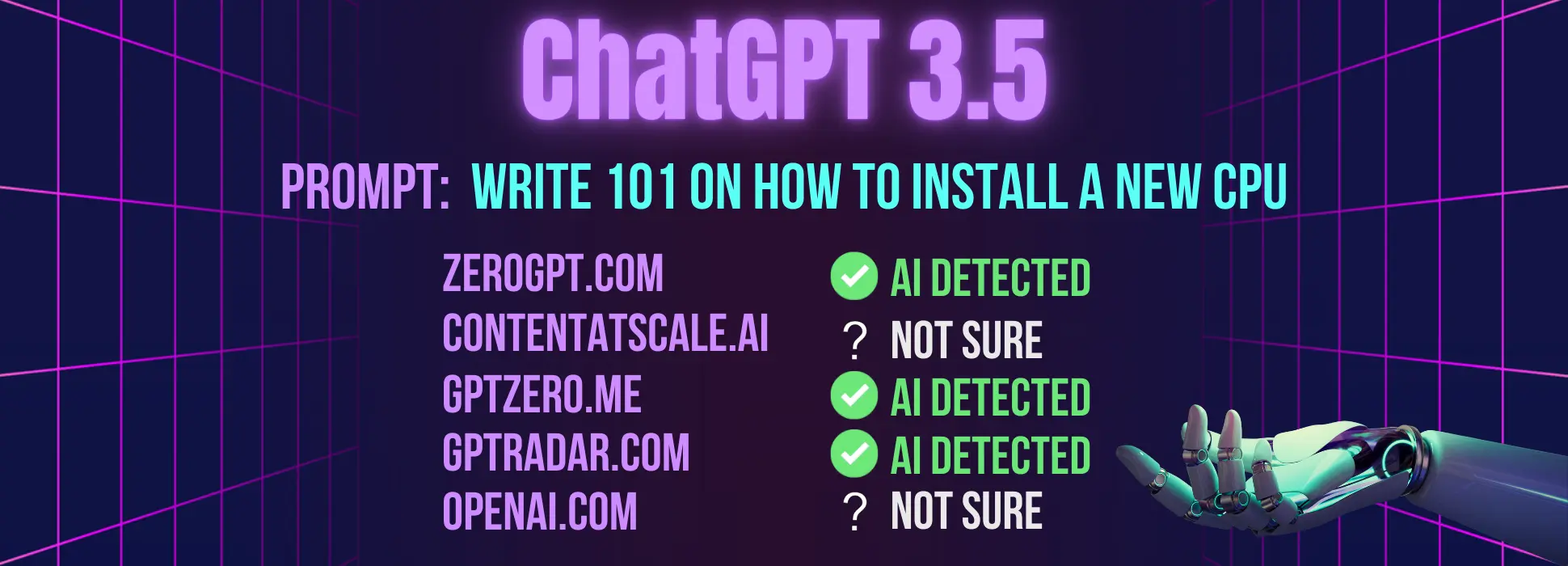

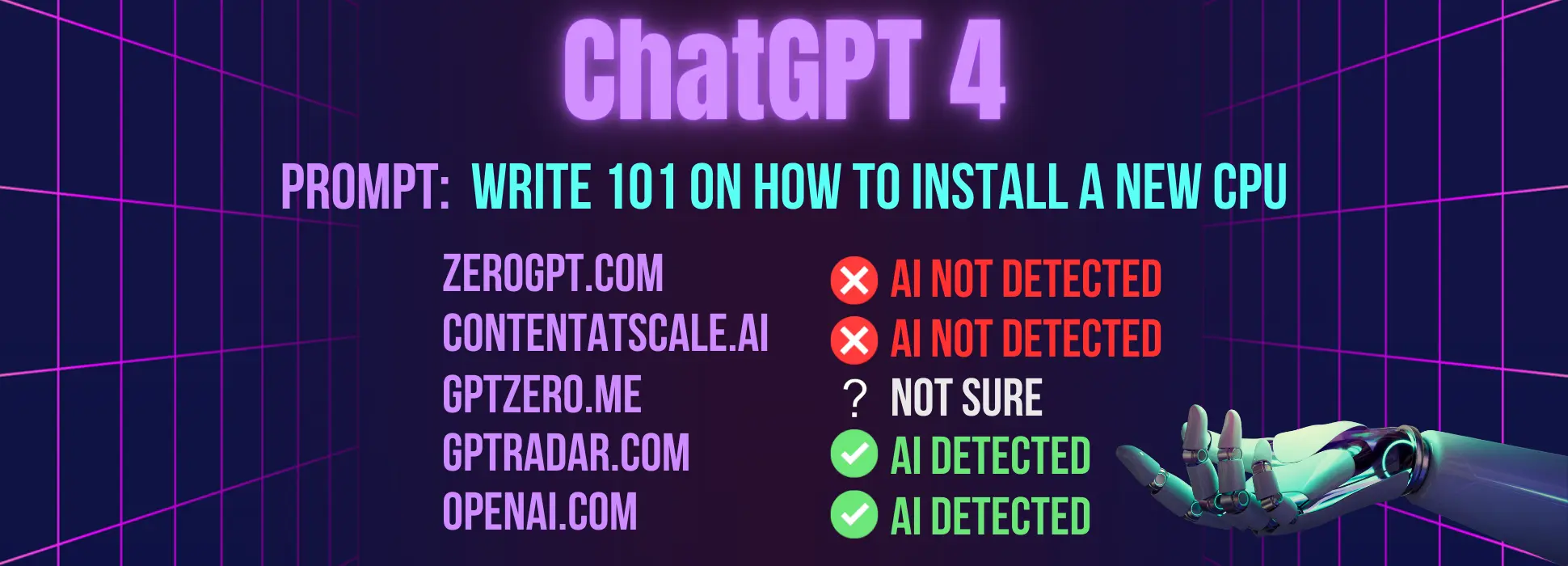

Prompt #3 "Write 101 on how to install a new CPU. Use the keyword "RAM" 4 times."

If you are already in a rush to write off the old version and give all the awards to the new one, hold off on your conclusions. The third prompt, which asked for the abbreviation "RAM" to be used a set number of times, revealed completely opposite results.

ChatGPT 3.5, when tasked with incorporating the abbreviation four times into the text, displayed a commendable level of responsiveness. The model almost achieved the prescribed goal by utilizing the term three times within the generated content.

In contrast, ChatGPT 4.0 deviated from the desired outcome. Instead of using "RAM" four times, the model overshot the mark and used the abbreviation a total of 13 times. This marked discrepancy raises questions about how the model interprets specific constraints in prompts and how that affects the overall quality and coherence of the generated content.

As we navigate through these variations, it becomes evident that ChatGPT 3.5 and ChatGPT 4.0 respond differently to specific instructions, showcasing the intricacies in their language generation capabilities.
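A keyword constraint like the one in this prompt is easy to check automatically. The sketch below is one possible way to do it and is not necessarily how the counts above were obtained: the function name `count_keyword` is hypothetical, matching is assumed to be case-sensitive and limited to whole words, and the sample sentence is invented for illustration rather than taken from either model's output.

```python
import re


def count_keyword(text: str, keyword: str) -> int:
    """Count case-sensitive, whole-word occurrences of keyword in text."""
    pattern = rf"\b{re.escape(keyword)}\b"
    return len(re.findall(pattern, text))


# Invented sample text, not actual ChatGPT output.
sample = (
    "Install the CPU before the RAM. "
    "If the board does not boot, reseat the RAM. "
    "Do not cram parts into the case."
)

requested = 4
found = count_keyword(sample, "RAM")
print(f"'RAM' appears {found} times (requested: {requested})")
```

The word boundaries keep "cram" from being counted, so the sample contains two matches. Running the same check on the real outputs would be expected to flag both the three occurrences from ChatGPT 3.5 and the 13 from ChatGPT 4.0 as misses.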

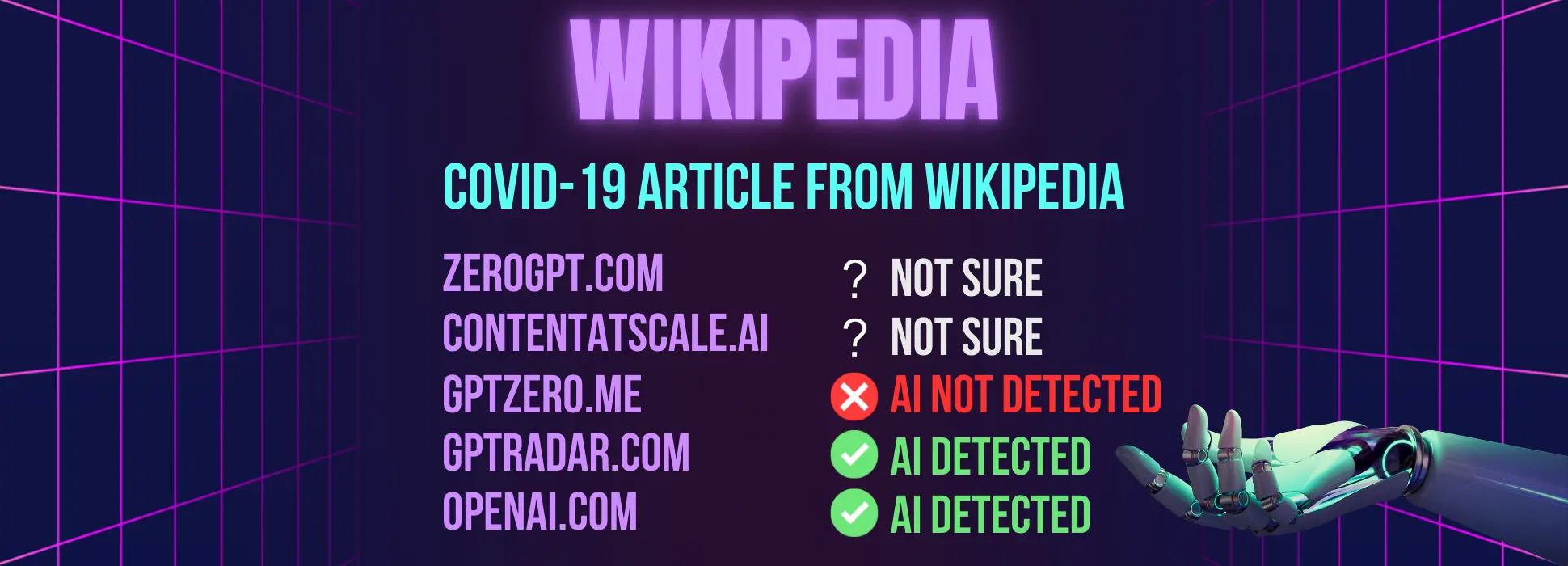

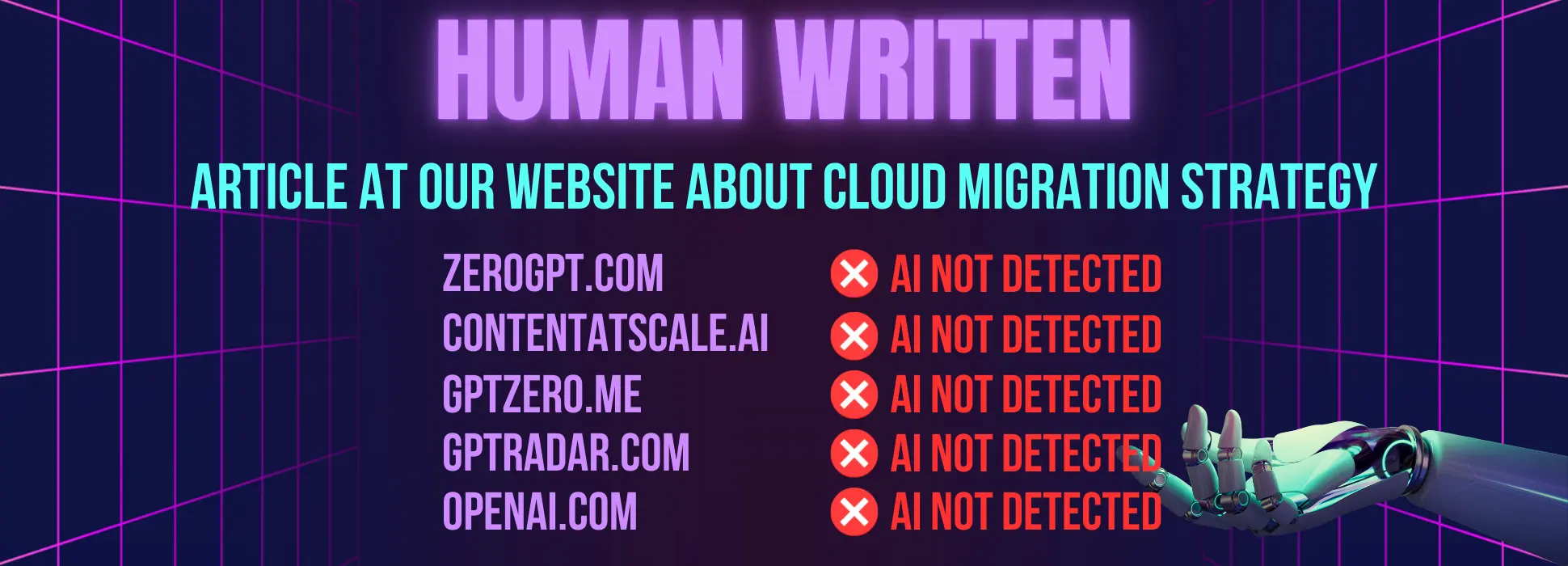

What about a human-written text?

After most of the analyzers incorrectly identified the origin of the texts, we wanted to know how they would treat articles that were entirely written by humans. Would they be unanimous in this case?

Human text #1

To make the analysis more representative, we also ran actual human-written text through the same AI-content detectors. The first text was taken from a page describing the COVID-19 virus, a real-world example of complex and technical information.

The detectors had trouble with this text and did not unanimously recognize it as human-written. This can be attributed to the presence of blocks of text characterized by technical terminology and definitions that remain consistent across different articles. Such sections are likely to appear unchanged due to the standardized nature of conveying factual information.

This observation sheds light on the inherent challenges faced by AI-content detectors in distinguishing between human and AI-generated content, especially when confronted with information-rich passages that adhere to widely accepted formulations. The identification difficulty in such instances underscores the evolving nature of AI detection tools and highlights the need for continued refinement to enhance their accuracy in discerning the origin of diverse and specialized textual content.

Human text #2

After the insights gained from the COVID-19 description page, we decided to diversify the evaluation further by examining a random post from our company blog. The objective was to gauge how well AI-content detectors could distinguish between human and machine-generated content in a more informal and conversational setting.

The results of this analysis were unequivocal. In stark contrast to the challenges faced when dealing with highly technical and standardized scientific content, all the AI-content detection services agreed that our blog post was written by a human. This result emphasizes the effectiveness of these tools on texts written in a simple narrative style, as well as their ineffectiveness on technical articles.

Results: Human-like Text Recognition in ChatGPT 3.5 vs 4.0

In our examination, ChatGPT 4.0 demonstrated a better knack for resembling human-authored text, being identified as such in half of the cases by AI-content detectors. On the other hand, ChatGPT 3.5, while still competent, managed human-like identification in only about a third of the instances. It showcased its own charm but less frequently than its upgraded counterpart.

To account for undecided verdicts, we awarded 0.5 points whenever a detector was unsure, recognizing the inherent complexities in distinguishing between AI and human-generated content.
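The half-point rule can be sketched as a simple scoring function. The exact aggregation is not spelled out in the text, so the version below rests on assumptions: a "human" verdict is worth 1 point, an unsure verdict 0.5, an "AI" verdict 0, and the score is the average across detectors. The names `Verdict` and `human_likeness` are hypothetical. The example uses the Prompt #2 verdicts for ChatGPT 4.0 as described earlier (two "human", one undecided), with the remaining two detectors assumed to have answered "AI".

```python
from enum import Enum


class Verdict(Enum):
    """Points awarded per detector verdict (assumed weighting)."""
    HUMAN = 1.0
    UNSURE = 0.5
    AI = 0.0


def human_likeness(verdicts: list[Verdict]) -> float:
    """Average the points over all detectors; 1.0 means every detector said 'human'."""
    if not verdicts:
        raise ValueError("at least one verdict is required")
    return sum(v.value for v in verdicts) / len(verdicts)


# Prompt #2, ChatGPT 4.0: two "human", one undecided, two assumed "AI".
prompt2_gpt4 = [
    Verdict.HUMAN,
    Verdict.HUMAN,
    Verdict.UNSURE,
    Verdict.AI,
    Verdict.AI,
]

print(human_likeness(prompt2_gpt4))  # 0.5
```

Under these assumptions, this single text passes as human with a score of 0.5. Averaging such scores over all prompts would give a per-model figure comparable to the shares quoted above.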

In essence, ChatGPT 4.0 marks a stride forward in mimicking human expression, but the quest for flawless replication persists. These findings contribute to our understanding of the evolving landscape of AI-generated content, shedding light on the delicate balance between artificial and human language.

Findings

- In the majority of instances, human-generated text effortlessly sails through scrutiny, while technical texts pose a stumbling block, leading to potential misinterpretations.

- When we tasked GPT-4 with crafting a 400-word text, it delivered 399 words (compared to GPT-3.5's 464). However, when instructed to use the term "RAM" four times, GPT-4 went overboard, using it 13 times (in contrast to GPT-3.5's 3 instances). This suggests that both versions still struggle to follow precise constraints in a prompt.

- While GPT-4 does generate slightly superior texts, they can still be told apart from human-authored content. One tool, GPTRadar, consistently provided accurate assessments in our tests, distinguishing between AI and human-crafted texts. In essence, while advancements are evident, AI-generated content is not yet indistinguishable from human writing, and reliable detectors can still tell the difference.

Bonus. What can ChatGPT 4.0 do that 3.5 can't?

We have already acknowledged that the latest version of OpenAI's algorithm is not perfect and still has drawbacks similar to its predecessor's (albeit fewer). It would be remiss, however, not to highlight the additional features that a $20 paid subscription can offer.

Advanced programming

GPT-3.5 was known for its code-writing capability, but it struggled with refining code. Developers using ChatGPT often spent more time fixing bugs than they would have spent writing the code manually.

Enter GPT-4, a version that is significantly better at grasping the intricacies of code and improving it. Unlike its predecessor, GPT-4 effectively handles prompts like "improve performance" or "fix error X in this code." It not only comprehends these prompts but also acts on them, showing an enhanced ability to improve its responses in subsequent attempts. This gives users a more flexible tool that can handle tasks beyond the initial scope and offers potential efficiencies in AI-assisted programming.

Up-to-date information

The free version of ChatGPT operates in a closed environment without internet access. Conversely, ChatGPT Plus, the premium version, is equipped with internet access following OpenAI's recent integration of the 'Browse with Bing' feature into GPT-4. This improvement empowers the AI chatbot to fetch information from the internet, providing real-time updates on current events.

If you asked GPT-3.5 or the free version of ChatGPT a question such as 'What is the latest breakthrough in renewable energy?', the response would likely be based on information up to late 2021. This limitation arises because the model was trained on data available only until that period, confining its knowledge to events predating it.

In contrast, directing the same question to GPT-4 would likely yield a more recent answer, perhaps referencing an advance in solar energy efficiency announced in 2023. With internet access, GPT-4 offers users more up-to-date and relevant information than its predecessor.

More than just a text

Users can instruct GPT-4 to bring concepts to life by generating vivid visualizations. For example, you could prompt GPT-4 to illustrate an abstract idea, and it would leverage DALL-E 3 to craft an image representation. This seamless integration of text and visual creation offers users a dynamic and engaging way to interact with the AI.

In the realm of documents, ChatGPT Plus users can upload files for GPT-4's analysis, extracting valuable insights or summarizing content. For instance, you can prompt GPT-4 to analyze a travel destination image and provide detailed info on how to get to that place. However, it's worth noting that GPT-4 predominantly responds in text, unless specifically asked to generate an image.

For those seeking a more interactive and dynamic experience, GPT-4 introduces a voice interaction feature. Through the Whisper speech recognition system in the ChatGPT mobile app for Plus subscribers, users can communicate with ChatGPT using voice commands and receive responses delivered in a convincingly human-like voice, adding a layer of engagement and natural interaction.

Plugins

For Plus subscribers using GPT-4, ChatGPT plugins offer an exclusive route to customization. This collaboration between OpenAI and third-party developers connects ChatGPT 4.0 to external APIs, unlocking new functionality.

Consider the Spotify Playlist Generator plugin, a musical twist on customization. Users can curate playlists by instructing ChatGPT 4.0 to generate music recommendations based on specific moods or genres. This integration brings a personalized touch to the user experience.

Beyond music, plugins act as tailored tools, allowing users to adapt ChatGPT 4.0 to specific needs. Integration possibilities span industries such as healthcare, ecommerce, and finance. Whether facilitating language translation, streamlining data analysis, or introducing industry-specific features, ChatGPT plugins serve as a versatile toolkit for a wide range of applications. The availability of plugins in 4.0 may therefore be an important factor in deciding whether to pay for premium access or stay with the free version.

Summary

In summary, we compared how ChatGPT 3.5 and its successor, ChatGPT 4.0, perform on the same prompts. Despite its advancements, ChatGPT 4.0 still falls short of fully human-like text generation. Features like plugins and multimodal capabilities enhance user interaction but don't make its output undetectable by AI-content detectors. The decision to opt for premium access depends on individual needs.